- research

- ai-ml

- reproducibility

- replicability

- repeatability

Demystifying AI Reproducibility: A Key to Innovation

In the rapidly expanding universe of Artificial Intelligence (AI) and Machine Learning (ML), the community faces a significant hurdle: ensuring that groundbreaking research is reproducible. The surge of submissions to leading conferences reflects the vitality and rapid progress of these fields. However, this growth has been shadowed by a reproducibility crisis, in which researchers often struggle to recreate published results, whether from others' work or from their own. This challenge raises questions not only about the reliability of individual findings but also about the soundness of the scientific process in AI/ML more broadly.

Numerous studies and surveys underscore the gravity of the situation. Attempts to re-execute experiments have produced a wide range of results, even under nominally identical conditions, illustrating how unpredictable current research practices can be. Factors such as under-reported experimental details and uncontrolled randomness contribute to these problems and point to an urgent need for a more systematic, transparent approach. Efforts to address them are further muddled by inconsistent use of key terms such as reproducibility, replicability, and repeatability.
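The effect of uncontrolled randomness is easy to demonstrate. The sketch below is a toy example rather than a real training pipeline: the hypothetical `run_experiment` function stands in for training and evaluating a model, and its "metric" depends only on random draws. Fixing the seed makes re-executions identical, while varying it mimics the run-to-run variation that unseeded experiments exhibit. In real ML frameworks, seeding every random number generator is usually necessary but not always sufficient, since some hardware-level operations can still be nondeterministic.

```python
import random
import statistics


def run_experiment(seed: int, n_samples: int = 1_000) -> float:
    """Hypothetical stand-in for a training run whose metric depends on randomness."""
    # A dedicated Random instance keeps the run isolated from global RNG state.
    rng = random.Random(seed)
    # Simulated per-sample scores; a real run would train and evaluate a model here.
    scores = [rng.gauss(0.80, 0.05) for _ in range(n_samples)]
    return statistics.mean(scores)


def is_repeatable(seed: int, runs: int = 3) -> bool:
    """Re-execute the experiment under unchanged conditions and compare outcomes exactly."""
    results = [run_experiment(seed) for _ in range(runs)]
    return all(result == results[0] for result in results)


if __name__ == "__main__":
    print(is_repeatable(seed=42))  # True: same seed, same code, same machine
    # Different seeds mimic uncontrolled randomness between otherwise identical runs.
    metrics = [run_experiment(seed) for seed in range(5)]
    print(f"spread across seeds: {max(metrics) - min(metrics):.4f}")
```

Using a local `random.Random` instance instead of the module-level functions means no other code can silently advance the generator between runs.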

As AI and ML continue to promise revolutionary changes across industries, the imperative to ensure that research is not just innovative but also reproducible has never been clearer. Tackling this crisis will require a collective shift towards more rigorous validation methods, clearer communication, and an unwavering commitment to scientific integrity.

Untangling the Terminology in AI/ML Research

In addressing AI and ML's reproducibility crisis, confusion around three key terms, 'repeatability', 'reproducibility', and 'replicability', is a significant hurdle. A surprising number of researchers admit difficulty in distinguishing between these concepts, which muddies efforts to ensure research integrity. Compounding the problem, the scientific community is split over the definitions of 'reproducibility' and 'replicability', with different camps using the two terms in opposite senses. This terminological mix-up complicates efforts to resolve the crisis and underlines the need for a shared vocabulary for discussing validation in AI/ML research.

Defining Reproducibility in AI/ML Research

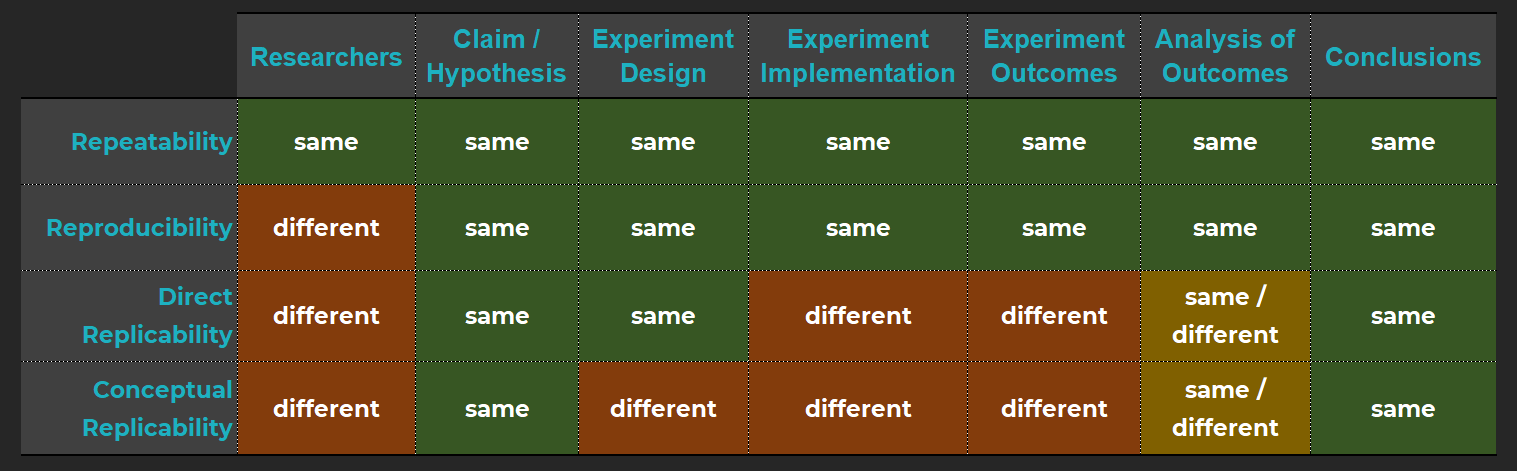

At Ready Tensor, we define the key terms in AI/ML research validation as follows:

Repeatability: the consistency of research outcomes when the original team re-executes its experiment under unchanged conditions. It establishes the reliability of the findings within the same operational context.

Reproducibility: achieved when independent researchers confirm the original experiment's findings using the documented experimental setup. This validation can be dependent, reusing the original data and code, or independent, reimplementing the experiment from its description; either way, it tests whether the findings hold up when a different team carries out the work.

Direct Replicability: an independent team's effort to validate the original study's results by intentionally altering the experiment's implementation while keeping the hypothesis and experimental design unchanged. The variation can involve different datasets, methods, or analytical approaches, with the aim of confirming the original conclusions under modified conditions.

Conceptual Replicability: testing the same hypothesis through an entirely new experimental approach. This departs substantially from the original study's design in order to test the hypothesis in broader or different contexts, showing whether the findings apply across varied experimental setups.
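One way to make these definitions operational is to encode them as a decision rule. The sketch below is illustrative only: `ValidationStudy` and `classify_study` are hypothetical names, every study type is assumed to test the original hypothesis, and the boolean inputs simplify the criteria above (who runs the study, whether the experimental design is kept, whether the implementation is intentionally altered, and whether the original code and data are reused). The enum order follows the progression from repeatability to conceptual replicability.

```python
from enum import IntEnum


class ValidationStudy(IntEnum):
    """Validation study types, ordered from least to most scrutiny."""
    REPEATABILITY = 1
    DEPENDENT_REPRODUCIBILITY = 2
    INDEPENDENT_REPRODUCIBILITY = 3
    DIRECT_REPLICABILITY = 4
    CONCEPTUAL_REPLICABILITY = 5


def classify_study(
    independent_team: bool,
    same_design: bool,
    implementation_altered: bool,
    reuses_original_code_and_data: bool,
) -> ValidationStudy:
    """Map the properties of a validation attempt onto the definitions above."""
    if not independent_team:
        # The original team re-executing under unchanged conditions.
        if same_design and not implementation_altered:
            return ValidationStudy.REPEATABILITY
        raise ValueError("changed conditions by the original team fall outside these definitions")
    if not same_design:
        # Same hypothesis, entirely new experimental approach.
        return ValidationStudy.CONCEPTUAL_REPLICABILITY
    if implementation_altered:
        # Same hypothesis and design, deliberately varied data, methods, or analysis.
        return ValidationStudy.DIRECT_REPLICABILITY
    if reuses_original_code_and_data:
        # Rerunning the original artifacts.
        return ValidationStudy.DEPENDENT_REPRODUCIBILITY
    # Reimplemented from the published description alone.
    return ValidationStudy.INDEPENDENT_REPRODUCIBILITY


if __name__ == "__main__":
    study = classify_study(
        independent_team=True,
        same_design=True,
        implementation_altered=False,
        reuses_original_code_and_data=False,
    )
    print(study.name)  # INDEPENDENT_REPRODUCIBILITY
    print(study > ValidationStudy.DEPENDENT_REPRODUCIBILITY)  # True
```

Using `IntEnum` lets study types be compared directly, so "more scrutiny than" becomes an ordinary `>` comparison.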

Incorporating these definitions into AI/ML research practices not only enhances the rigor and reliability of the findings but also fosters a culture of transparency and accountability.

Pathways to Achieving Reproducibility

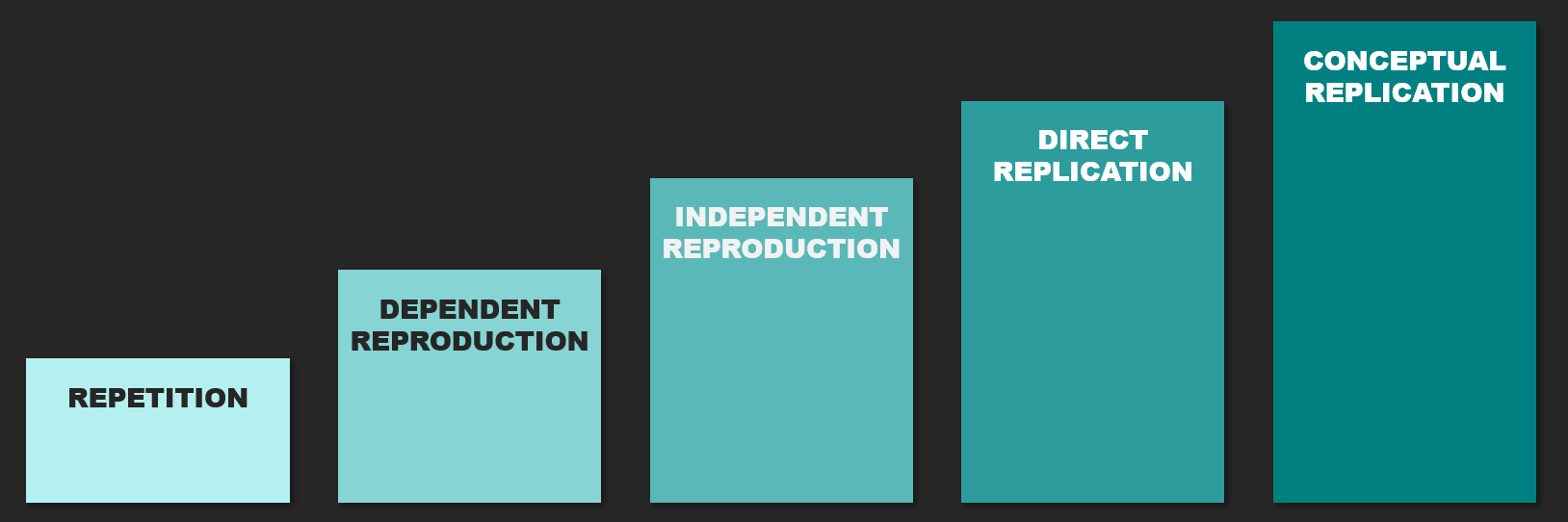

There are two main pathways for conducting a reproducibility study:

Dependent Reproducibility: recreating the results using the original study's code and data. This approach is less rigorous and is contingent on the original artifacts being available.

Independent Reproducibility: reimplementing the experiment from the published description, without relying on the original code and data. This is a more stringent test of reproducibility, because it requires deeper engagement with the original study's methodology.
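Dependent reproducibility hinges on the original artifacts being available and unchanged. A minimal sketch, assuming the authors publish a manifest alongside their code: the hypothetical `ExperimentManifest` records the seed, hyperparameters, Python version, and SHA-256 checksums of the data files, and the hypothetical `verify_artifacts` lets a reproducer confirm they hold the same bytes before rerunning anything. The field names and JSON layout are assumptions, not a standard format.

```python
import hashlib
import json
import platform
from dataclasses import asdict, dataclass, field


def sha256_hex(data: bytes) -> str:
    """Checksum used to confirm that an artifact is byte-for-byte identical."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class ExperimentManifest:
    """Hypothetical record of what a reproducer needs to rerun the experiment."""
    seed: int
    hyperparameters: dict
    data_checksums: dict  # artifact name -> SHA-256 hex digest
    python_version: str = field(default_factory=platform.python_version)

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "ExperimentManifest":
        return cls(**json.loads(text))


def verify_artifacts(manifest: ExperimentManifest, artifacts: dict) -> list:
    """Return the names of artifacts that are missing or whose checksum differs."""
    problems = []
    for name, expected in manifest.data_checksums.items():
        data = artifacts.get(name)
        if data is None or sha256_hex(data) != expected:
            problems.append(name)
    return problems


if __name__ == "__main__":
    train = b"x1,y1\nx2,y2\n"  # placeholder bytes standing in for a real data file
    published = ExperimentManifest(
        seed=42,
        hyperparameters={"learning_rate": 1e-3, "epochs": 10},
        data_checksums={"train.csv": sha256_hex(train)},
    )
    manifest = ExperimentManifest.from_json(published.to_json())
    print(verify_artifacts(manifest, {"train.csv": train}))  # []
    print(verify_artifacts(manifest, {"train.csv": train + b"x3,y3\n"}))  # ['train.csv']
```

Checking checksums first separates "the artifacts changed" from "the experiment does not reproduce", two failures that otherwise look identical.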

Independent reproduction is more rigorous than dependent reproduction because the reproducer must interpret and implement the original experimental design on their own. Rerunning the original code also reruns any errors it contains, whereas a fresh implementation is more likely to expose flaws, gaps in the description, or limitations in the original findings.
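Because an independent reimplementation rarely matches the original bit for bit, a common approach is to judge reproduction by whether the reported metrics are recovered within an agreed tolerance. The sketch below assumes a simple relative-tolerance criterion using Python's `math.isclose`; the hypothetical `compare_metrics` function, the metric names, the numbers, and the 5% default tolerance are illustrative placeholders, and a real study would need to justify its tolerance, for example from the run-to-run variance observed across seeds.

```python
import math


def compare_metrics(reported: dict, reproduced: dict, rel_tol: float = 0.05) -> dict:
    """For each reported metric, record whether the reproduction falls within rel_tol."""
    outcome = {}
    for name, original in reported.items():
        if name not in reproduced:
            outcome[name] = False  # a metric that could not be reproduced at all
            continue
        outcome[name] = math.isclose(reproduced[name], original, rel_tol=rel_tol)
    return outcome


if __name__ == "__main__":
    # Placeholder numbers for illustration only.
    reported = {"accuracy": 0.912, "f1": 0.874}
    reproduced = {"accuracy": 0.905, "f1": 0.801}
    results = compare_metrics(reported, reproduced)
    print(results)  # {'accuracy': True, 'f1': False}
    print(all(results.values()))  # False
```

Reporting a verdict per metric, rather than a single pass/fail, shows exactly which claims survived reproduction.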

Implications for Scientific Rigor and Reliability

The hierarchy of validation studies, from repeatability to conceptual replicability, reflects increasing levels of scientific rigor, with each step placing the findings under greater scrutiny.

Repeatability establishes the initial integrity of findings by confirming that results are consistent rather than the product of chance. It is a fundamental check of any study's internal consistency.

Dependent and independent reproducibility raise the level of scrutiny. Dependent reproducibility confirms that the same materials yield the reported results, while independent reproducibility tests whether the findings can be reproduced with a fresh implementation, which also checks whether the experiment's description is clear and complete.

Direct and conceptual replicability sit at the top of the hierarchy and offer the highest level of scrutiny. Direct replicability examines whether findings remain stable when the data, methods, or analysis are deliberately varied, whereas conceptual replicability assesses whether the conclusions generalize to new contexts.

This progression from repeatability to replicability not only strengthens trust in the findings but also tests their robustness and applicability under varied conditions, increasing scientific rigor and clarifying the research's scope and limitations.
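At the top of the hierarchy, direct replicability shifts the question from whether the same numbers come back to whether the same conclusion holds when the implementation is deliberately varied. The sketch below assumes the original finding is a comparison, namely that a proposed method outperforms a baseline, and checks whether that conclusion survives on each variant. `Result`, `conclusion_holds`, and `replication_report` are hypothetical names, and the dataset names and scores are placeholders; in practice each row would come from rerunning both methods on that variant.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    """Scores for the proposed method and the baseline under one experimental variant."""
    variant: str
    proposed: float
    baseline: float


def conclusion_holds(result: Result, min_margin: float = 0.0) -> bool:
    """Hypothetical original claim: the proposed method beats the baseline by more than min_margin."""
    return result.proposed - result.baseline > min_margin


def replication_report(results: list, min_margin: float = 0.0) -> dict:
    """Per-variant verdicts plus the share of variants that support the claim."""
    verdicts = {r.variant: conclusion_holds(r, min_margin) for r in results}
    support = sum(verdicts.values()) / len(verdicts) if verdicts else 0.0
    return {"verdicts": verdicts, "support": support}


if __name__ == "__main__":
    # Placeholder scores for illustration only.
    results = [
        Result("original_dataset", proposed=0.86, baseline=0.81),
        Result("alternative_dataset_a", proposed=0.79, baseline=0.77),
        Result("alternative_dataset_b", proposed=0.72, baseline=0.74),
    ]
    report = replication_report(results)
    print(report["verdicts"])
    print(f"support: {report['support']:.2f}")  # support: 0.67
```

Checking the direction of the effect, rather than the exact scores, matches what direct replicability tests: whether the conclusion, not the number, is stable.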

Towards a Reproducible Future in AI/ML Research

To help address the reproducibility crisis in AI/ML, we have defined repeatability, reproducibility, and replicability and clarified the role each plays in building scientific trust. Ultimately, findings should be not just repeatable and reproducible but robustly replicable.

At Ready Tensor, our platform embodies this commitment: it is designed to enhance reproducibility and encourage collaboration among researchers. By prioritizing open, transparent research, we aim to advance the field with dependable knowledge and to foster innovations that are not only groundbreaking but also universally trusted.